“When the states legalize the deliberate ending of certain lives… it will eventually broaden the categories of those who can be put to death with impunity.”—Nat Hentoff, The Washington Post, 1992

Bodily autonomy—the right to privacy and integrity over our own bodies—is rapidly vanishing.

The debate now extends beyond forced vaccinations or invasive searches to include biometric surveillance, wearable tracking, and predictive health profiling.

We are entering a new age of algorithmic, authoritarian control, where our thoughts, moods, and biology are monitored and judged by the state.

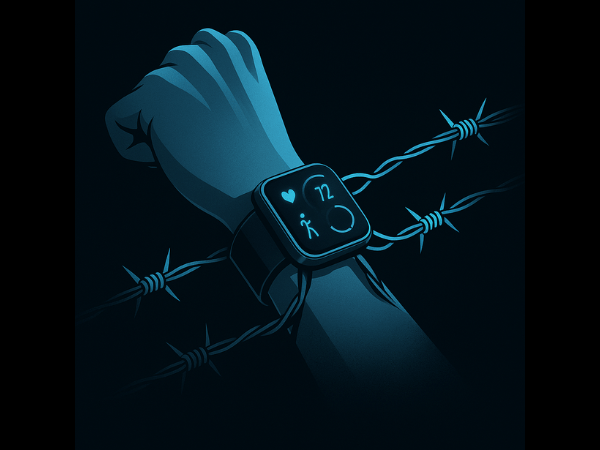

This is the dark promise behind the newest campaign by Robert F. Kennedy Jr., President Trump’s Secretary of Health and Human Services, to push for a future in which all Americans wear biometric health-tracking devices.

Under the guise of public health and personal empowerment, this initiative is nothing less than the normalization of 24/7 bodily surveillance—ushering in a world where every step, heartbeat, and biological fluctuation is monitored not only by private companies but also by the government.

In this emerging surveillance-industrial complex, health data becomes currency. Tech firms profit from hardware and app subscriptions, insurers profit from risk scoring, and government agencies profit from increased compliance and behavioral insight.

This convergence of health, technology, and surveillance is not a new strategy—it’s just the next step in a long, familiar pattern of control.

Surveillance has always arrived dressed as progress.

Every new wave of surveillance technology—GPS trackers, red light cameras, facial recognition, Ring doorbells, Alexa smart speakers—has been sold to us as a tool of convenience, safety, or connection. But in time, each became a mechanism for tracking, monitoring, or controlling the public.

What began as voluntary has become inescapable and mandatory.

The moment we accepted the premise that privacy must be traded for convenience, we laid the groundwork for a society in which nowhere is beyond the government’s reach—not our homes, not our cars, not even our bodies.

RFK Jr.’s wearable plan is just the latest iteration of this bait-and-switch: marketed as freedom, built as a cage.

According to Kennedy’s plan, which has been promoted as part of a national campaign to “Make America Healthy Again,” wearable devices would track glucose levels, heart rate, activity, sleep, and more for every American.

Participation may not be officially mandatory at the outset, but the implications are clear: get on board, or risk becoming a second-class citizen in a society driven by data compliance.

What began as optional self-monitoring tools marketed by Big Tech is poised to become the newest tool in the surveillance arsenal of the police state.

Devices like Fitbits, Apple Watches, glucose trackers, and smart rings collect astonishing amounts of intimate data, from stress and depression to heart irregularities and early signs of illness. When this data is shared across government databases, insurers, and health platforms, it becomes a potent tool not only for health analysis but also for control.

Once symbols of personal wellness, these wearables are becoming digital cattle tags—badges of compliance tracked in real time and regulated by algorithm.

And it won’t stop there.

The body is fast becoming a battleground in the government’s expanding war on the inner realms.

The infrastructure is already in place to profile and detain individuals based on perceived psychological “risks.” Now imagine a future in which your wearable data triggers a mental health flag. Elevated stress levels. Erratic sleep. A skipped appointment. A sudden drop in heart rate variability.

In the eyes of the surveillance state, these could be red flags—justification for intervention, inquiry, or worse.

RFK Jr.’s embrace of wearable tech is not a neutral innovation. It is an invitation to expand the government’s war on thought crimes, health noncompliance, and individual deviation.

It shifts the presumption of innocence to a presumption of diagnosis. You are not well until the algorithm says you are.

The government has already weaponized surveillance tools to silence dissent, flag political critics, and track behavior in real time. Now, with wearables, they gain a new weapon: access to the human body as a site of suspicion, deviance, and control.

While government agencies pave the way for biometric control, it will be corporations—insurance companies, tech giants, employers—who act as enforcers for the surveillance state.

Wearables don’t just collect data. They sort it, interpret it, and feed it into systems that make high-stakes decisions about your life: whether you get insurance coverage, whether your rates go up, whether you qualify for employment or financial aid.

As reported by ABC News, a JAMA article warns that wearables could easily be used by insurers to deny coverage or hike premiums based on personal health metrics like calorie intake, weight fluctuations, and blood pressure.

It’s not a stretch to imagine this bleeding into workplace assessments, credit scores, or even social media rankings.

Employers already offer discounts for “voluntary” wellness tracking—and penalize nonparticipants. Insurers give incentives for healthy behavior—until they decide unhealthy behavior warrants punishment. Apps track not just steps, but mood, substance use, fertility, and sexual activity—feeding the ever-hungry data economy.

This dystopian trajectory has been long foreseen and forewarned.

In Brave New World by Aldous Huxley (1932), compliance is maintained not through violence but by way of pleasure, stimulation, and chemical sedation. The populace is conditioned to accept surveillance in exchange for ease, comfort, and distraction.

In THX 1138 (1971), George Lucas envisions a corporate-state regime where biometric monitoring, mood-regulating drugs, and psychological manipulation reduce people to emotionless, compliant biological units.

Gattaca (1997) imagines a world in which genetic and biometric profiling predetermines one’s fate, eliminating privacy and free will in the name of public health and societal efficiency.

In The Matrix (1999), written and directed by the Wachowskis, human beings are harvested as energy sources while trapped inside a simulated reality—an unsettling parallel to our increasing entrapment in systems that monitor, monetize, and manipulate our physical selves.

Minority Report (2002), directed by Steven Spielberg, depicts a pre-crime surveillance regime driven by biometric data. Citizens are tracked via retinal scans in public spaces and targeted with personalized ads—turning the body itself into a surveillance passport.

The anthology series Black Mirror, inspired by The Twilight Zone, brings these warnings into the digital age, dramatizing how constant monitoring of behavior, emotion, and identity breeds conformity, judgment, and fear.

Taken collectively, these cultural touchstones deliver a stark message: dystopia doesn’t arrive overnight.

As Margaret Atwood warned in The Handmaid’s Tale, “Nothing changes instantaneously: in a gradually heating bathtub, you’d be boiled to death before you knew it.” Though Atwood’s novel focuses on reproductive control, its larger warning is deeply relevant: when the state presumes authority over the body—whether through pregnancy registries or biometric monitors—bodily autonomy becomes conditional, fragile, and easily revoked.

The tools may differ, but the logic of domination is the same.

What Atwood portrayed as reproductive control, we now face in a broader, digitized form: the quiet erosion of autonomy through the normalization of constant monitoring.

When both government and corporations gain access to our inner lives, what’s left of the individual?

We must ask: when surveillance becomes a condition of participation in modern life—employment, education, health care—are we still free? Or have we become, as in every great dystopian warning, conditioned not to resist, but to comply?

That’s the hidden cost of these technological conveniences: today’s wellness tracker is tomorrow’s corporate leash.

In a society where bodily data is harvested and analyzed, the body itself becomes government and corporate property. Your body becomes a form of testimony, and your biometric outputs are treated as evidence. The list of bodily intrusions we’ve documented—forced colonoscopies, blood draws, DNA swabs, cavity searches, breathalyzer tests—is growing.

To this list we now add a subtler, but more insidious, form of intrusion: forced biometric consent.

Once health tracking becomes a de facto requirement for employment, insurance, or social participation, it will be impossible to “opt out” without penalty. Those who resist may be painted as irresponsible, unhealthy, or even dangerous.

We’ve already seen chilling previews of where this could lead. In states with abortion restrictions, digital surveillance has been weaponized to track and prosecute individuals for seeking abortions—using period-tracking apps, search histories, and geolocation data.

When bodily autonomy becomes criminalized, the data trails we leave behind become evidence in a case the state has already decided to make.

This is not merely the expansion of health care. It is the transformation of health into a mechanism of control—a Trojan horse for the surveillance state to claim ownership over the last private frontier: the human body.

Because ultimately, this isn’t just about surveillance—it’s about who gets to live.

Too often, these debates are falsely framed as having only two possible outcomes: safety vs. freedom, health vs. privacy, compliance vs. chaos. But these are illusions. A truly free and just society can protect public health without sacrificing bodily autonomy or human dignity.

We must resist the narrative that demands our total surrender in exchange for security.

Once biometric data becomes currency in a health-driven surveillance economy, it’s only a matter of time before that data is used to determine whose lives are worth investing in—and whose are not.

We’ve seen this dystopia before.

In the 1973 film Soylent Green, the elderly become expendable when resources grow scarce. My good friend Nat Hentoff—an early and principled voice warning against the devaluation of human life—sounded this alarm decades ago. Once pro-choice, Hentoff came to believe that the erosion of medical ethics—particularly the growing acceptance of abortion, euthanasia, and selective care—was laying the groundwork for institutionalized dehumanization.

As Hentoff warned, once the government sanctions the deliberate ending of certain lives, it sets out on a slippery slope: broader swaths of the population will eventually be deemed expendable.

Hentoff referred to this as “naked utilitarianism—the greatest good for the greatest number. And individuals who are in the way—in this case, the elderly poor—have to be gotten out of the way. Not murdered, heaven forbid. Just made comfortable until they die with all deliberate speed.”

That concern is no longer theoretical.

In 1996, writing about the Supreme Court’s consideration of physician-assisted suicide, Hentoff warned that once a state decides who shall die “for their own good,” there are “no absolute limits.” He cited medical leaders and disability advocates who feared that the poor, elderly, disabled, and chronically ill would become targets of a system that valued efficiency over longevity.

Today, data collected through wearables (heart rate, mood, mobility, compliance) can shape decisions about insurance, treatment, and life expectancy. How long before an algorithm quietly decides whose suffering is too expensive, whose needs are too inconvenient, or whose body no longer qualifies as worth saving?

This isn’t a left or right issue.

Dehumanization—the process of stripping individuals or groups of their dignity, autonomy, or moral worth—cuts across the political spectrum.

Today, dehumanizing language and policies aren’t confined to one ideology—they’re weaponized across the political divide. Prominent figures have begun referring to political opponents, immigrants, and other marginalized groups as “unhuman”—a disturbing echo of the labels that have justified atrocities throughout history.

As reported by Mother Jones, J.D. Vance endorsed a book by influencer Jack Posobiec and Joshua Lisec that advocates crushing “unhumans” like vermin.

This kind of rhetoric isn’t abstract—it matters.

How can any party credibly claim to be “pro‑life” when it devalues the humanity of entire groups, stripping them of the moral worth that should be fundamental to civil society?

When the state and its corporate allies treat people as data, as compliance issues, or as “unworthy,” they dismantle the very notion of equal human dignity.

In such a world, rights—including the right to bodily autonomy, health care, or even life itself—become privileges doled out only to the “worthy.”

This is why our struggle must be both political and moral. We can’t defend bodily sovereignty without defending every human being’s equal humanity.

The dehumanization of the vulnerable crosses political lines. It manifests differently—through budget cuts here, through mandates and metrics there—but the outcome is the same: a society that no longer sees human beings, only data points.

The conquest of physical space—our homes, cars, public squares—is nearly complete.

What remains is the conquest of inner space: our biology, our genetics, our psychology, our emotions. As predictive algorithms grow more sophisticated, the government and its corporate partners will use them to assess risk, flag threats, and enforce compliance in real time.

The goal is no longer simply to monitor behavior but to reshape it—to preempt dissent, deviance, or disease before it arises. This is the same logic that drives Minority Report-style policing, pre-crime mental health interventions, and AI-based threat assessments.

If this is the future of “health freedom,” then freedom has already been redefined as obedience to the algorithm.

We must resist the surveillance of our inner and outer selves.

We must reject the idea that safety requires total transparency, or that health requires constant monitoring. We must reclaim the sanctity of the human body as a space of freedom—not as a data point.

The push for mass adoption of wearables is not about health. It is about habituation.

The goal is to train us—subtly, systematically—to accept government and corporate ownership of our bodies.

We must not forget that our nation was founded on the radical idea that all human beings are created equal, “endowed by their Creator with certain unalienable Rights,” among them life, liberty, and the pursuit of happiness.

These rights are not granted by the government, the algorithm, or the market. They are inherent. They are indivisible. And they apply to all of us—or they will soon apply to none of us.

The Founders got this part right: their affirmation of our shared humanity is more vital than ever before.

As I make clear in my book Battlefield America: The War on the American People and in its fictional counterpart The Erik Blair Diaries, the question before us is whether we will defend that humanity or surrender it, one wearable at a time. Now is the time to draw the line, before the body becomes just another piece of state property.

Source: https://tinyurl.com/mr24w458

ABOUT JOHN W. WHITEHEAD

Constitutional attorney and author John W. Whitehead is founder and president of The Rutherford Institute. His most recent books are the best-selling Battlefield America: The War on the American People, the award-winning A Government of Wolves: The Emerging American Police State, and a debut dystopian fiction novel, The Erik Blair Diaries. Whitehead can be contacted at staff@rutherford.org. Nisha Whitehead is the Executive Director of The Rutherford Institute. Information about The Rutherford Institute is available at www.rutherford.org.

Publication Guidelines / Reprint Permission

John W. Whitehead’s weekly commentaries are available for publication to newspapers and web publications at no charge.